GPT-5 and Research

A while back, when GPT-4 hadn’t been out long, I asked it a question. I don’t remember the exact question, but it was something like:

What have been the trends in tropical cyclones hitting the East Coast of Australia?

The answer came back immediately, with the characteristic “on the one hand, on the other hand” style of GPT-4, but it was fairly certain that they were getting worse.

When GPT-5 came out I asked a similar but more specific question:

What is the long term trend in severe tropical cyclones hitting the east coast of Australia?

The result surprised me. First of all, it showed “Thinking… checking reliable sources…”. I thought this might be marketing, like the Microsoft troubleshooter, which in all the years I tried it showed some kind of progress bar for 1–2 minutes followed by, in effect, “No idea”.

But it’s not marketing.

Short answer: down.

Here’s the big picture on severe (AUS Cat 3–5) tropical cyclones that actually cross the east coast (mainly QLD, occasionally NSW):

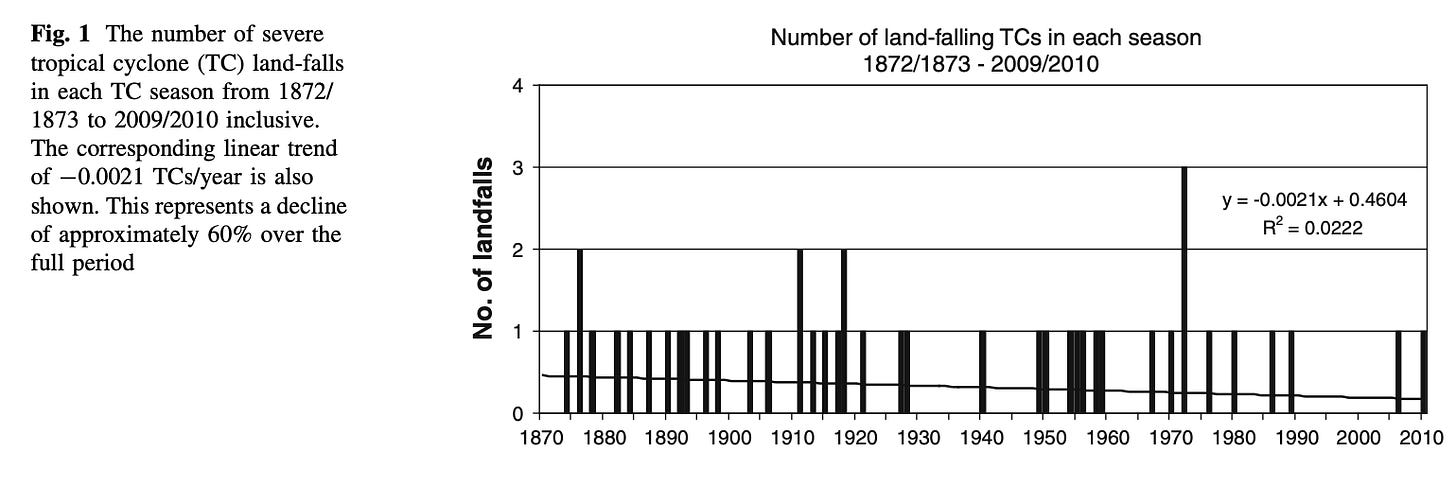

- Long-term landfall trend: Using a 150-year record, severe east-coast landfalls have **declined by ~62%**, from ~0.45 per year in the early 1870s to ~0.17 per year recently. That dataset also shows big swings from decade to decade.

Wow. And it cited Callaghan and Power (2011), the paper referenced on this topic in Chapter 11 (Extreme Weather) of the IPCC Sixth Assessment Report (AR6).
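The ~62% figure follows directly from the two rates quoted in the answer. Here is a quick Python check that uses only those two numbers (~0.45 and ~0.17 severe landfalls per year); it confirms the arithmetic, not the paper’s underlying analysis.

```python
# Rates quoted in the GPT-5 answer (severe east-coast landfalls per year).
early_rate = 0.45   # early 1870s
recent_rate = 0.17  # recent decades

# Percentage decline relative to the early rate.
decline_pct = (early_rate - recent_rate) / early_rate * 100

# The same rates expressed as landfalls per decade, which is easier to picture.
per_decade_early = early_rate * 10
per_decade_recent = recent_rate * 10

print(f"Decline: {decline_pct:.0f}%")
print(f"Per decade: {per_decade_early:.1f} -> {per_decade_recent:.1f}")
```

In other words, roughly four or five severe landfalls per decade in the early record versus fewer than two per decade recently.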

Here’s the graph from their paper:

There’s a big difference between GPT-4 and GPT-5. I have a small amount of technical knowledge of LLMs (large language models), definitely not enough, but here’s my take on the two.

GPT-4 spits out the “average” of everything on the internet: it was trained by feeding a sizeable chunk of the whole internet into an algorithm that lets it spit out plausible next words. It’s wonderful at making coherent sentences and creating whole essays, but if the information on the internet has been overwhelmed by PR or pop psychology, then that’s where your answer will come from.

I did ask GPT-4 how scientists in the late 1700s and early 1800s started figuring out that there were elements. It was amazing at that. There are lots of university sites where someone has explained different aspects of that history. Each one left some blanks for me, some unexplained step where I would think:

How did they get from a to b?

How did they measure the amounts? Atoms are tiny; they didn’t have accurate enough instruments…

And so on. But using GPT-4, I could keep asking questions, drilling down, and getting answers. No one has overwhelmed the internet with PR fluff about chemistry in the 1800s, so the results are pretty solid.

Many people are aware that GPT-4 used to “hallucinate”. If you asked it a question and requested references, it would often invent them. My mental model is this: if you asked a question about, say, cholesterol and health, and asked for a reference, you might get something like “Journal of …”, Peter Smith, 2012, because those words showed up more often than other words in articles about cholesterol. But if you went looking for that paper, it didn’t exist. Often the journal didn’t exist either.
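To make that mental model concrete, here is a toy “next word” generator in Python. It is only an illustration: GPT-4 is a large neural network, not a word-count table, and the tiny citation-like corpus below is made up. The toy counts which word follows which (a bigram table) and always picks the most frequent continuation. Every step is locally plausible, yet the reference it produces appears nowhere in its corpus.

```python
from collections import Counter, defaultdict

# A made-up mini "corpus" of citation-like snippets (hypothetical text).
corpus = [
    "smith 2012 review of cardiology",
    "smith 2010 journal of lipid research",
    "jones 2012 journal of lipid research",
    "brown 2012 journal of nutrition",
    "smith 2012 annals of surgery",
]

# Count which word follows which (a bigram table).
next_counts = defaultdict(Counter)
for line in corpus:
    words = line.split()
    for current, following in zip(words, words[1:]):
        next_counts[current][following] += 1

def generate(start, max_words=6):
    """Greedily append the most frequent next word until nothing follows."""
    out = [start]
    while len(out) < max_words and next_counts[out[-1]]:
        out.append(next_counts[out[-1]].most_common(1)[0][0])
    return " ".join(out)

reference = generate("smith")
print(reference)
print("In corpus?", reference in corpus)
```

The output, “smith 2012 journal of lipid research”, stitches together the most common pieces, but no such line exists in the corpus: a plausible-looking reference to a paper that was never written.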

GPT-5 is a whole different world. It’s like having a smart graduate student sitting next to you. But a graduate student in every field.

What appears to happen in GPT-5 is that your question (along with relevant earlier questions you’ve asked) is turned into “stuff to look up”. The papers or technical resources it finds are then fed into the LLM, and the instructions in your original question are used to prepare the answer.
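Here is a rough Python sketch of that “look it up, then answer” flow. It is my guess at the general shape, not OpenAI’s actual implementation. The document names and snippets are hypothetical, ranking is by simple word overlap, and the step that sends the assembled prompt to a model is left out.

```python
import re

# Hypothetical mini library of "technical resources" (name -> keyword snippet).
documents = {
    "cyclone_landfalls": "severe tropical cyclones landfall east coast australia decline",
    "dvorak_overview": "dvorak technique satellite imagery tropical cyclone intensity estimation",
    "protein_binding_review": "computational methods binding sites proteins",
}

def tokens(text):
    """Lower-case words, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, k=2):
    """Rank documents by how many words they share with the query."""
    q = tokens(query)
    scored = sorted(documents.items(),
                    key=lambda item: len(q & tokens(item[1])),
                    reverse=True)
    # Keep the top k, dropping anything with no overlap at all.
    return [name for name, text in scored[:k] if q & tokens(text)]

def build_prompt(question, history=()):
    """Fold earlier questions into the search, then assemble sources + question."""
    query = " ".join([*history, question])
    sources = retrieve(query)
    lines = [f"Source: {name}: {documents[name]}" for name in sources]
    return "\n".join(lines + [f"Answer using the sources above: {question}"])

print(build_prompt("How is it measured?",
                   history=["Tell me about the Dvorak technique from satellite for tropical cyclones"]))
```

On its own, “How is it measured?” shares no words with any of these documents, so nothing would be retrieved. With the earlier Dvorak question folded in, the Dvorak overview ranks first. A real system would more likely use a search engine or embedding similarity than raw word overlap.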

For example:

Explain the Emanuel theory on maximum potential intensity of tropical cyclones

What’s the state of the art in computational methods of identifying binding sites on proteins?

Tell me about the Dvorak technique from satellite for tropical cyclones

You’ll get a well-structured summary with references, especially if you ask for them. I get references with everything, but that might be because GPT-5 has learnt that I want them. You can then ask GPT-5 to give you a 500-word summary of a paper, or 1,000 words, or whatever you want. If you don’t understand the answer, you ask more questions: “Please explain it as if I was a beginner in this field”.

Here’s one where you might get low-quality studies if you don’t ask specific questions, so it’s all about learning how to phrase the question:

Summarise any large scale studies looking at health outcomes for men, for those who have no exercise, some exercise and high levels of exercise

The answer was pretty good. But then I dug a little more:

Are there any attempts to pick out causality in these studies?

GPT-5 understands what I’m looking for, dismisses that first group of studies, finds some others that attempted to identify causality, and explains that their results didn’t look nearly as good.

I asked it about communications from deep-space missions: current bandwidth, potential for improvement, power budget, costs. It produced all of that in plenty of detail. It covered infrared laser comms, a technology that is new for space, and the test missions. It did link budgets (antenna gains, transmitter power, losses, signal-to-noise ratio at the receiver, etc.). Then: “Would you like a clean procurement spec?” I declined, as I don’t have a mission going to Mars and am short the half billion needed. But it was nice that it asked.
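For readers who haven’t met one, a link budget is mostly bookkeeping in decibels. Received power equals transmitter power plus both antenna gains, minus free-space path loss and any other losses. The receiver’s thermal noise is kTB (Boltzmann’s constant × system temperature × bandwidth), and the signal-to-noise ratio is the difference between the two. The Python sketch below uses hypothetical round-number inputs (roughly Mars-scale distance, an X-band frequency); they are for illustration only and do not describe any real mission.

```python
import math

BOLTZMANN = 1.380649e-23        # J/K
SPEED_OF_LIGHT = 299_792_458.0  # m/s

def db(x):
    """Convert a linear power ratio (or watts, giving dBW) to decibels."""
    return 10 * math.log10(x)

def free_space_path_loss_db(distance_m, freq_hz):
    """FSPL = (4*pi*d*f/c)^2, expressed in dB."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT)

def link_snr_db(tx_power_w, tx_gain_dbi, rx_gain_dbi, distance_m, freq_hz,
                other_losses_db, system_temp_k, bandwidth_hz):
    """Received power minus thermal noise power (kTB), both in dBW."""
    received_dbw = (db(tx_power_w) + tx_gain_dbi + rx_gain_dbi
                    - free_space_path_loss_db(distance_m, freq_hz)
                    - other_losses_db)
    noise_dbw = db(BOLTZMANN * system_temp_k * bandwidth_hz)
    return received_dbw - noise_dbw

# Hypothetical, round-number inputs for illustration only.
snr = link_snr_db(tx_power_w=100, tx_gain_dbi=45, rx_gain_dbi=74,
                  distance_m=2e11, freq_hz=8.4e9, other_losses_db=3,
                  system_temp_k=30, bandwidth_hz=1e6)
print(f"SNR at receiver: {snr:.1f} dB")
```

Because everything is in decibels, the budget reduces to addition and subtraction. Doubling the distance costs about 6 dB (20·log10 2), so a link that works comfortably at one distance can fall apart at a planet’s far side.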

For bonus points, I asked GPT-5 for a summary of all trends in tropical cyclones. Most people would be surprised at the results. I thought it scored 90 out of 100, then wondered whether GPT-5 had actually scored 100 out of 100 and it was my understanding that was a little deficient. I’m guessing the latter.

I’m using the paid version; it costs a few cups of good coffee a month and is well worth it. I don’t know how good the free version is.

For anyone who has questions about the world we live in, I strongly recommend the subscription version of GPT-5. I hardly use Google anymore.

A friend who works in medical research started using MS Copilot in the past week. He’s amazed.

I haven’t used Copilot but I love the name.

That’s how I think about GPT-5: a great resource that helps me get where I’d like to land, about 5–10x faster than before.